`class chord_extractor.ChordExtractor`

Abstract class for extracting chords from sound files. It also provides functionality for sound file conversion, as extractors may take only certain file formats, and integrating conversion logic here enables it to be parallelized.

`abstract extract(file: str) → List[ChordChange]`

Extract chord changes from a particular file.

Parameters: file – File path to the relevant file (and, if possible, can accept a file-like object)

Returns: List of chord changes for the sound file

`extract_many(files: List[str], callback: Optional[Callable[[LabelledChordSequence], None]] = None, num_extractors: int = 1, num_preprocessors: int = 1, max_files_in_cache: int = 50, stop_on_error = False) → List[LabelledChordSequence]`

Extract chords from a list of files. As opposed to the similar extract method, this one gives the option of running extractions in parallel. Furthermore, any file conversions that have to be done as a prerequisite to extraction can also be done in parallel with the extractions.

Files can be a mix of different file formats. If a file is of a format that needs to be converted in preprocessing, it is the conversion that is passed to a queue for extraction. Otherwise the original file will be queued. Any conversions are cached in a temporary folder specified using the environment variable EXTRACTOR_TEMP_FILE_PATH (/tmp if not specified).
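To make the "conversion in parallel with extraction" idea concrete, here is a minimal sketch of the pattern using only the standard library. It is not the library's implementation: `needs_conversion`, `convert`, `extract` and `extract_many_sketch` are hypothetical stand-ins, the conversion writes nothing to disk, and `max_files_in_cache` is not modelled. The only detail taken from the documentation is the `EXTRACTOR_TEMP_FILE_PATH` variable with its `/tmp` default.

```python
import os
from concurrent.futures import Future, ThreadPoolExecutor
from typing import Callable, List, Optional, Tuple

# Documented behaviour: conversions are cached under /tmp unless the variable is set.
CACHE_DIR = os.environ.get("EXTRACTOR_TEMP_FILE_PATH", "/tmp")

Result = Tuple[str, List[Tuple[str, float]]]


def needs_conversion(path: str) -> bool:
    # Assumption for this sketch: only .wav files can be extracted directly.
    return not path.endswith(".wav")


def convert(path: str) -> str:
    # Placeholder conversion: only computes the cache path, writes nothing.
    name = os.path.splitext(os.path.basename(path))[0] + ".wav"
    return os.path.join(CACHE_DIR, name)


def extract(path: str) -> List[Tuple[str, float]]:
    # Placeholder extractor: reports "no chord" at time zero.
    return [("N", 0.0)]


def extract_many_sketch(
    files: List[str],
    callback: Optional[Callable[[Result], None]] = None,
    num_extractors: int = 1,
    num_preprocessors: int = 1,
) -> List[Result]:
    def prepare(path: str) -> str:
        # Queue the conversion if one is needed, otherwise the original file.
        return convert(path) if needs_conversion(path) else path

    def extract_prepared(original: str, prepared: Future) -> Result:
        # Waits for this file's preprocessing, then extracts and reports.
        result = (original, extract(prepared.result()))
        if callback is not None:
            callback(result)
        return result

    with ThreadPoolExecutor(num_preprocessors) as pre, \
            ThreadPoolExecutor(num_extractors) as ex:
        prepared = [(f, pre.submit(prepare, f)) for f in files]
        jobs = [ex.submit(extract_prepared, f, fut) for f, fut in prepared]
        return [job.result() for job in jobs]


seen: List[Result] = []
results = extract_many_sketch(
    ["a.mid", "b.wav"], callback=seen.append, num_extractors=2, num_preprocessors=2
)
```

Using two separate pools means a slow conversion never occupies an extractor slot that a ready file could use, which is the benefit the documentation describes.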

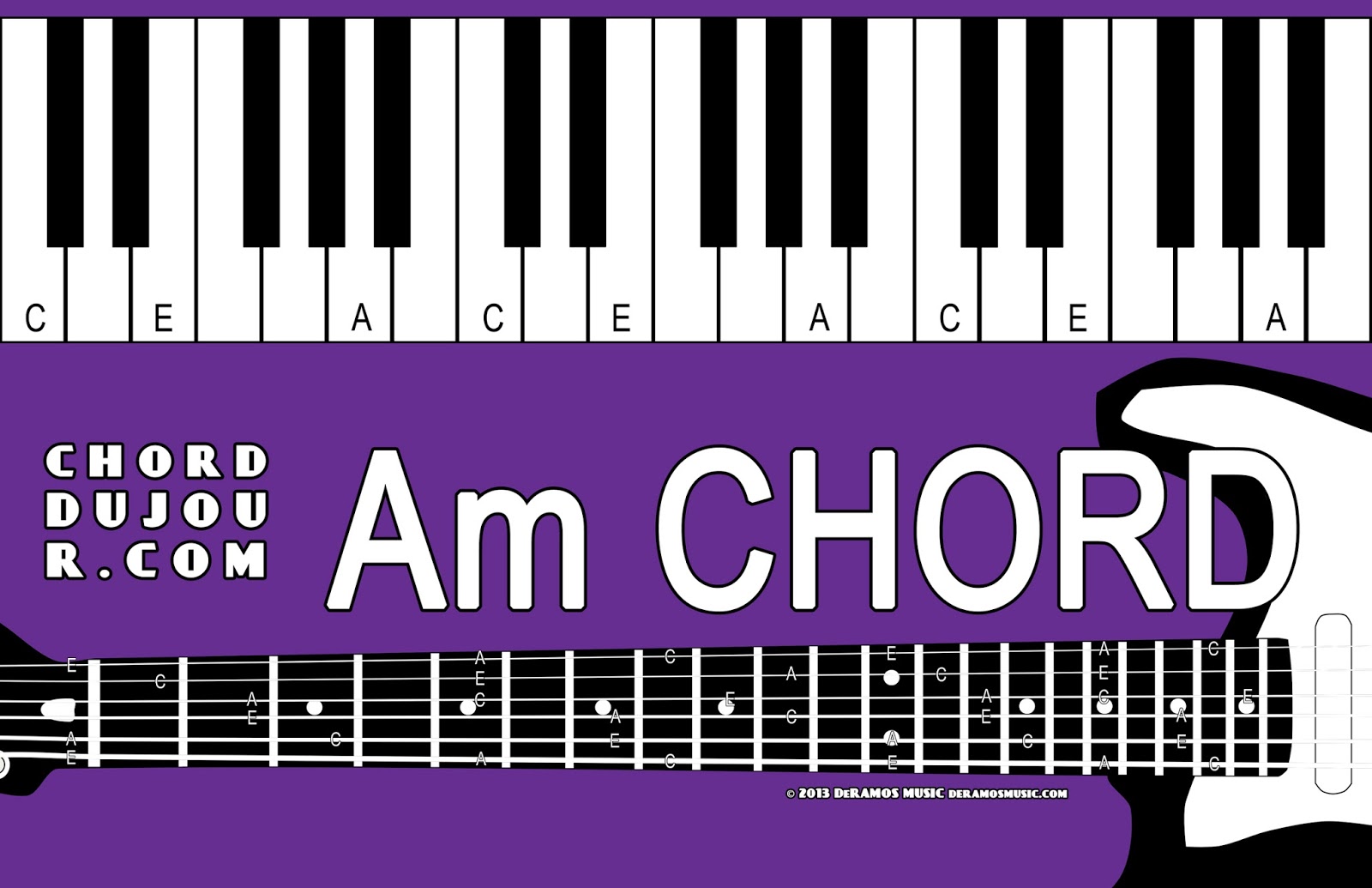

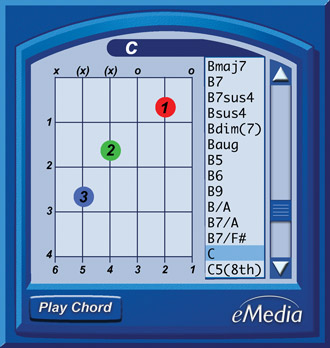

`class chord_extractor.ChordChange(chord: str, timestamp: float)`

Tuple to represent the time point in a sound file at which a chord changes and which chord it changes to. The string notation used in the chord string is standard notation (and that used by Chordino). Examples include Emaj7, Dm, Cb7, where maj = major, m = minor, b = flat etc. Note that if 'N' is given, this denotes no chord, e.g. if a part of the sound file is non-musical.

`chord: str` – Alias for field number 0

`timestamp: float` – Alias for field number 1
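As a small illustration of working with such tuples, the sketch below turns a list of chord changes into (chord, start, end) spans and skips the 'N' (no chord) entries. The `ChordChange` class here is a local stand-in that mirrors the two documented fields so the example runs without the library installed; `chord_spans` and the sample data are hypothetical.

```python
from typing import List, NamedTuple, Tuple


class ChordChange(NamedTuple):
    """Local stand-in mirroring the documented fields (chord, timestamp)."""
    chord: str
    timestamp: float


def chord_spans(
    changes: List[ChordChange], end_time: float
) -> List[Tuple[str, float, float]]:
    """Return (chord, start, end) for every span that is not 'N'."""
    # A sentinel marks the end of the file so the last change gets an end time.
    following = changes[1:] + [ChordChange("N", end_time)]
    return [
        (current.chord, current.timestamp, nxt.timestamp)
        for current, nxt in zip(changes, following)
        if current.chord != "N"
    ]


changes = [
    ChordChange("N", 0.0),
    ChordChange("Emaj7", 0.5),
    ChordChange("Dm", 2.0),
    ChordChange("N", 3.5),
]
spans = chord_spans(changes, end_time=4.0)
# spans == [("Emaj7", 0.5, 2.0), ("Dm", 2.0, 3.5)]
```

Because the type is a tuple, `changes[1][0]` and `changes[1].chord` both give `"Emaj7"`, which is what "alias for field number 0" means.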

Example of bulk extraction with the Chordino extractor:

    from chord_extractor.extractors import Chordino
    from chord_extractor import clear_conversion_cache, LabelledChordSequence

    files_to_extract_from = [...]  # paths of the sound files to process

    def save_to_db_cb(results: LabelledChordSequence):
        # Every time one of the files has had chords extracted, receive the chords here
        # along with the name of the original file and then run some logic here, e.g. to
        # save the latest data to DB
        pass

    chordino = Chordino(roll_on=1)

    # Optionally clear cache of file conversions (e.g. wav files that have been converted from midi)
    clear_conversion_cache()

    res = chordino.extract_many(files_to_extract_from, callback=save_to_db_cb, num_extractors=2,
                                num_preprocessors=2, max_files_in_cache=10, stop_on_error=False)
    # => LabelledChordSequence(
    #    id='/tmp/extractor/d8b8ab2f719e8cf40e7ec01abd116d3a',
    #    sequence=[...])

The short answer is that you need much more than one algorithm. Good chord recognition methods could more aptly be described as "systems", but usually they are indeed based on an initial transform to the frequency domain (most often DFT).

If you want a chord representation of the song similar to this: C G Am F7 F6 C, then this is actually a problem that is slightly removed from recognising the notes in a piece of audio. In fact, there are two problems (roughly speaking):

- finding which pitches are present at any time.
- grouping these pitches over time so as to be able to assign a chord label to a time interval.

It turns out that the way you transform from the time domain (normal audio) to the frequency domain (spectral representation) is only of limited importance. It's very important what you do afterwards, and often sophisticated probabilistic models (similar to those in speech recognition: HMMs, DBNs, ...) are used. Try Google Scholar "chord transcription", or "chord detection", or "chord labelling" for advanced research in this area.

Most of these approaches use a discrete Fourier transform (DFT) to create the initial spectrogram. During further processing, too, they tend to differ only slightly, though different time-series smoothing techniques have been used: hidden Markov models, dynamic Bayesian networks, support vector machines (SVMstruct), and conditional random fields, among others. The most advanced transcribers use automatic tuning, key information, bass note information, and information of the metric position to improve the results. My thesis (Chapter 2) gives a nice overview.
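The two problems named above (finding the pitches, then grouping them over time) can be illustrated with a toy sketch. It is deliberately naive and is not how Chordino or any research system works: it evaluates the DFT only at equal-tempered note frequencies, matches against major and minor triad templates, and merges repeated frame labels instead of using a probabilistic smoother such as an HMM. All function names, the 8 kHz sample rate, and the synthetic sine-wave input are assumptions made for the example.

```python
import cmath
import math

NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]


def note_freq(midi):
    """Equal-tempered frequency of a MIDI note number (A4 = 69 = 440 Hz)."""
    return 440.0 * 2 ** ((midi - 69) / 12)


def chroma(frame, sample_rate, midi_range=range(48, 72)):
    """Problem 1: energy per pitch class, from the DFT at note frequencies."""
    bins = [0.0] * 12
    for midi in midi_range:
        step = -2j * math.pi * note_freq(midi) / sample_rate
        coeff = sum(x * cmath.exp(step * n) for n, x in enumerate(frame))
        bins[midi % 12] += abs(coeff) ** 2
    return bins


def label_frame(bins):
    """Pick the major or minor triad whose three notes hold the most energy."""
    if sum(bins) < 1e-9:
        return "N"  # silence: no chord
    best_label, best_score = "N", 0.0
    for root in range(12):
        for suffix, third in (("", 4), ("m", 3)):
            score = bins[root] + bins[(root + third) % 12] + bins[(root + 7) % 12]
            if score > best_score:
                best_label, best_score = NOTE_NAMES[root] + suffix, score
    return best_label


def chord_changes(signal, sample_rate, frame_len):
    """Problem 2: label each frame, keep only the points where the label changes."""
    changes = []
    for start in range(0, len(signal) - frame_len + 1, frame_len):
        label = label_frame(chroma(signal[start:start + frame_len], sample_rate))
        if not changes or changes[-1][1] != label:
            changes.append((start / sample_rate, label))
    return changes


def tone_mix(midi_notes, sample_rate, n_samples):
    """Synthetic test input: a sum of sine waves, one per note."""
    return [
        sum(math.sin(2 * math.pi * note_freq(m) * n / sample_rate) for m in midi_notes)
        for n in range(n_samples)
    ]


SAMPLE_RATE = 8000
# Half a second of C major (C4, E4, G4) followed by half a second of A minor (A3, C4, E4).
signal = tone_mix([60, 64, 67], SAMPLE_RATE, 4000) + tone_mix([57, 60, 64], SAMPLE_RATE, 4000)
changes = chord_changes(signal, SAMPLE_RATE, frame_len=2000)
print(changes)  # [(0.0, 'C'), (0.5, 'Am')]
```

On real recordings a per-frame decision like this flickers between related chords, which is why the smoothing stage matters so much more than the choice of transform.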
